Harvesting with active perception for open-field agricultural robotics

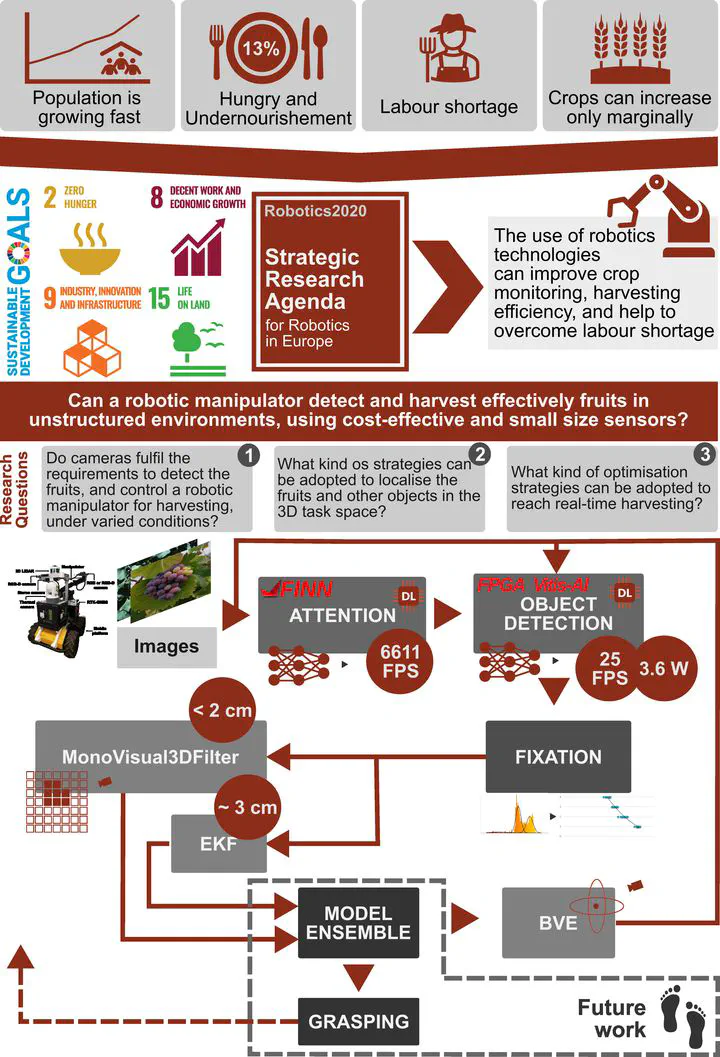

Graphical abstract of the strategy proposed in this PhD thesis

The global population is increasing rapidly, which poses significant challenges in terms of hunger and undernourishment, now affecting at least 12.9 % of people worldwide. Because agricultural land is finite and only marginally expandable, it is crucial to enhance productivity by adopting precision and intelligent farming techniques. According to the Agenda for Robotics in Europe, robotics technology is a key solution for improving crop monitoring and harvesting efficiency, and thus for addressing this pressing societal issue. The International Federation of Robotics (IFR) also notes that robots can play a significant role in meeting these challenges, contributing to the Sustainable Development Goals (SDGs) set by the United Nations (UN) in Agenda 2030. These goals include, among others: zero hunger; decent work and economic growth; industry, innovation, and infrastructure; and life on land (goals 2, 8, 9, and 15, respectively).

In agricultural robotics, research has focused on developing methods to detect and segment fruits or branches in open-field environments. Despite these efforts, many initiatives struggle with low visibility and occlusions. Active perception has been little explored as an alternative approach to these challenges. We conducted a comprehensive review of the state of the art, highlighting the limitations and potential of active perception for efficient fruit detection and harvesting.

Throughout this thesis, we researched the application of advanced visual perception systems powered by deep learning to identify fruits and other objects in agricultural scenes. We experimented with various deep learning models, including YOLO and SSD algorithms, specifically SSD MobileNet v2, SSD Inception v2, and SSD ResNet 50. These models were optimised on specialised hardware to ensure reliable, near-real-time performance.
The models achieved detection F1 scores of around 60 % for tomatoes and grape bunches, with acceleration techniques boosting detection speeds up to 25 FPS on FPGAs. Further experiments leveraging the FPGAs’ programmable logic enabled us to achieve object detection rates of 6610.94 FPS using a MobileNet v2 classifier.

To estimate the 3D positions of fruits using monocular cameras, we developed knowledge-based algorithms, namely the MonoVisual3DFilter and the Best Viewpoint Estimator (BVE) + Extended Kalman Filter (EKF). The MonoVisual3DFilter combines detection data with histogram filters to infer fruit positions, while the BVE + EKF method iteratively corrects the estimated distance to each fruit based on similar data. Both approaches yielded accurate results, with estimation errors between 1 cm and 3 cm.

We have made the datasets and some of the code developed during this project publicly available, adhering to the European open data principles, via the Zenodo and GitLab platforms.
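
To illustrate the general idea of a histogram filter mentioned above, the following is a minimal sketch of a one-dimensional discrete Bayes update over candidate camera-to-fruit distances. It is not the MonoVisual3DFilter itself: the function names, the Gaussian measurement model, the distance range, the noise level `sigma`, and the measurement values are all assumptions made purely for illustration.

```python
import math


def histogram_filter_update(belief, bin_centres, measurement, sigma):
    """One Bayes update of a discrete belief over candidate distances.

    Assumption: the measurement is a noisy distance with Gaussian noise
    of standard deviation `sigma` (hypothetical measurement model).
    """
    likelihood = [
        math.exp(-0.5 * ((measurement - c) / sigma) ** 2) for c in bin_centres
    ]
    posterior = [b * l for b, l in zip(belief, likelihood)]
    total = sum(posterior)
    if total == 0.0:
        # The measurement carries no usable information; keep the prior.
        return list(belief)
    return [p / total for p in posterior]


def expected_distance(belief, bin_centres):
    """Mean of the belief, used here as the point estimate."""
    return sum(b * c for b, c in zip(belief, bin_centres))


# Candidate distances from 0.20 m to 1.00 m in 1 cm bins (assumed range).
bin_centres = [0.20 + 0.01 * i for i in range(81)]
# Start from a uniform prior: every distance is equally plausible.
belief = [1.0 / len(bin_centres)] * len(bin_centres)

# Made-up distance measurements in metres, for illustration only.
measurements = [0.52, 0.49, 0.51, 0.50]
for z in measurements:
    belief = histogram_filter_update(belief, bin_centres, z, sigma=0.03)

distance = expected_distance(belief, bin_centres)
print(f"Estimated distance: {distance:.3f} m")
```

Each new observation sharpens the belief around the distances that are consistent with it, so the estimate improves as the camera gathers more views. A full 3D version would maintain such a belief over a grid of positions rather than over a single distance.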