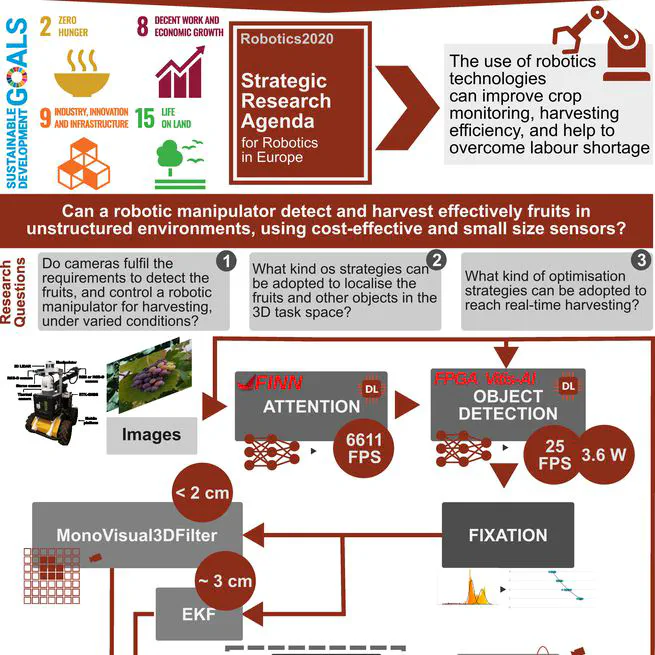

The global population is rapidly increasing, leading to significant challenges in hunger and undernourishment. With agricultural land being finite and only marginally expandable, it is crucial to enhance productivity by adopting precision and intelligent farming techniques. Robotics technology emerges as a key solution to improve crop monitoring and harvesting efficiency. In this thesis, we investigated the application of advanced visual perception systems powered by deep learning for identifying fruits and other objects in agricultural scenes. We experimented with various deep learning models, including YOLO and SSD detectors. These models were optimised for specialised hardware to ensure reliable, near-real-time performance. The models achieved detection F1 scores of around 60% for tomatoes and grape bunches, with acceleration techniques boosting detection speeds to up to 25 FPS on FPGAs. Further experiments leveraging the FPGAs’ programmable logic enabled us to achieve object detection rates of 6610.94 FPS using a MobileNet v2 classifier. For the estimation of fruits’ 3D positions using monocular cameras, we developed knowledge-based algorithms, namely the MonoVisual3DFilter and the Best Viewpoint Estimator (BVE) + Extended Kalman Filter (EKF). Both approaches yielded accurate results, with estimation errors between 1 cm and 3 cm. We have made the datasets and some of the code developed during this project publicly available via the Zenodo and GitLab platforms, adhering to the European open data principles.

Oct 21, 2024
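As a simplified illustration of the filtering idea behind the EKF-based estimator, the sketch below fuses repeated 3D position estimates of a single static fruit with a linear Kalman filter. It assumes, hypothetically, that each camera frame already yields a noisy 3D point in metres with isotropic 2 cm noise; the function name `fuse_static_position` and the noise values are illustrative and are not taken from the thesis.

```python
import numpy as np


def fuse_static_position(measurements, meas_std=0.02):
    """Fuse noisy per-frame 3D position estimates (metres) of a static fruit.

    Linear Kalman filter with a static state model (illustrative assumption):
        x_k = x_{k-1}             (the fruit does not move, no process noise)
        z_k = x_k + v_k,  v_k ~ N(0, R),  R = meas_std**2 * I
    Returns the fused position and its 3x3 covariance.
    """
    z = np.asarray(measurements, dtype=float)
    R = np.eye(3) * meas_std**2  # assumed measurement noise covariance
    H = np.eye(3)                # position is observed directly
    x = z[0].copy()              # initialise from the first measurement...
    P = R.copy()                 # ...with that measurement's uncertainty
    for zk in z[1:]:
        # The prediction step is the identity for a static target, so only update.
        S = H @ P @ H.T + R                # innovation covariance
        K = P @ H.T @ np.linalg.inv(S)     # Kalman gain
        x = x + K @ (zk - H @ x)           # corrected state estimate
        P = (np.eye(3) - K @ H) @ P        # corrected covariance
    return x, P
```

With isotropic noise and no process noise, this filter reduces to a running average whose covariance shrinks as `meas_std**2 / n`. The thesis's MonoVisual3DFilter and BVE + EKF address the harder problem of recovering depth from a monocular camera, which this sketch does not attempt.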

Development and trajectory control of omnidirectional robots using classic PID control and Model Predictive Control (MPC)

Apr 27, 2016
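The project summary names classic PID control. The sketch below shows that idea for an ideal holonomic (omnidirectional) base. It assumes perfect kinematics, meaning commanded body velocities are achieved instantly. It uses one independent PID loop per degree of freedom (x, y, heading) with hypothetical gains. It does not model wheel dynamics or actuator limits, and it does not cover the MPC variant. The names `PID`, `wrap_angle` and `simulate` are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PID:
    """Discrete PID controller (textbook parallel form, no anti-windup)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a spurious kick
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def simulate(target: Tuple[float, float, float], dt: float = 0.05, steps: int = 600):
    """Drive an ideal holonomic robot from the origin to target = (x, y, theta)."""
    x, y, th = 0.0, 0.0, 0.0
    pids = [PID(2.0, 0.1, 0.1) for _ in range(3)]  # hypothetical gains, one loop per DOF
    for _ in range(steps):
        ex, ey = target[0] - x, target[1] - y
        eth = wrap_angle(target[2] - th)
        vx_w, vy_w = pids[0].update(ex, dt), pids[1].update(ey, dt)
        omega = pids[2].update(eth, dt)
        # World-frame velocity -> body-frame command, as an omni base would receive it
        vx_b = math.cos(th) * vx_w + math.sin(th) * vy_w
        vy_b = -math.sin(th) * vx_w + math.cos(th) * vy_w
        # Ideal kinematics: rotate the body command back to the world frame and integrate
        x += (math.cos(th) * vx_b - math.sin(th) * vy_b) * dt
        y += (math.sin(th) * vx_b + math.cos(th) * vy_b) * dt
        th = wrap_angle(th + omega * dt)
    return x, y, th
```

For example, `simulate((1.0, 0.5, math.pi / 2))` drives the simulated robot close to the target pose. An omnidirectional base can translate and rotate independently, so the three loops can be tuned separately. A real controller would also add output saturation and integral anti-windup.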